Introduction

Dependency management isn’t new, but it has become a bigger issue in recent years with the popularity of frameworks and languages that have large ecosystems of third-party plugins and modules. With Node.js, keeping dependencies secure is an ongoing and time-consuming task because the majority of Node.js projects rely on publicly available modules or libraries to add functionality. Instead of writing the code themselves, developers end up adding a large number of libraries to their applications. The major benefit is the speed at which development can take place. However, great benefits can come with great pitfalls, and this is especially true for security. Because of these risks, the Open Web Application Security Project (OWASP) currently ranks “Using Components with Known Vulnerabilities” in the top ten most critical web application vulnerabilities in their latest report.

Introducing Risk through Rapid Development

Over-reliance on third-party modules to implement functionality can let vulnerabilities creep into your applications unnoticed. Some of these vulnerabilities are present out of the box as soon as you install the module. For example, at the time of writing, the popular shelljs module has had over 481,000 downloads in the last day.

However, installing this module will introduce a command injection vulnerability. This affects every version of shelljs from 0.0.1 to 0.7.7.

ShellJS Version 0.0.1

ShellJS Version 0.7.7

This is cause for concern considering that there have been over 11 million downloads in the last month alone. Furthermore, other popular and well-known modules that recently contained vulnerabilities include:

Angular@1->1.6.2

Express@4.14.0

Request@2.67.0

The Problems

When a developer runs npm install for a module and an entry is created in the application’s package.json, the module often stays at that version unless a developer needs functionality that only exists in a newer release. However, as time goes on, vulnerabilities can be found in these outdated versions, either by researchers or in the wild. Therefore, it’s important to monitor dependency vulnerability creep.
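To make the idea of vulnerability creep concrete, here is a minimal sketch that compares pinned versions against a list of affected ranges. The advisory list format, the function names and the example-lib dependency are assumptions made for illustration; only the shelljs range (0.0.1 to 0.7.7) comes from this article. It also assumes exact x.y.z versions rather than semver ranges.

```javascript
// Sketch: flag pinned dependencies that fall inside a known-vulnerable range.
// The advisory format and helper names are hypothetical.

// Parse "1.2.3" into [1, 2, 3]; assumes plain x.y.z versions with no range operators.
function parseVersion(version) {
  return version.split(".").map((part) => parseInt(part, 10));
}

// Return a negative, zero, or positive number, like a sort comparator.
function compareVersions(a, b) {
  const pa = parseVersion(a);
  const pb = parseVersion(b);
  for (let i = 0; i < 3; i++) {
    const diff = (pa[i] || 0) - (pb[i] || 0);
    if (diff !== 0) return diff;
  }
  return 0;
}

// True when `version` lies in the inclusive range [first, last].
function isInRange(version, first, last) {
  return compareVersions(version, first) >= 0 && compareVersions(version, last) <= 0;
}

// dependencies: the "dependencies" object from package.json (exact versions).
// advisories: [{ module, first, last }] with inclusive affected ranges.
function findVulnerable(dependencies, advisories) {
  return advisories
    .filter((adv) => adv.module in dependencies)
    .filter((adv) => isInRange(dependencies[adv.module], adv.first, adv.last))
    .map((adv) => `${adv.module}@${dependencies[adv.module]}`);
}

// The shelljs range is the one quoted earlier; example-lib is a made-up dependency.
const advisories = [{ module: "shelljs", first: "0.0.1", last: "0.7.7" }];
const dependencies = { shelljs: "0.7.0", "example-lib": "1.1.3" };
console.log(findVulnerable(dependencies, advisories));
```

A real project would take its advisory data from a maintained database rather than a hand-written list, which is the job of the tools covered later in this post.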

The Solution(s)

If old modules can become prone to vulnerabilities over time and the latest module versions could potentially be free of vulnerabilities, then we could actively update all of our dependencies once newer versions of them are published. However, this can involve a lot of work and repetition, particularly where there are a large number of dependencies in each application.

There are a number of tools available that help you keep your dependencies up to date. One of them is Greenkeeper.

Greenkeeper is a tool for dependency management and provides GitHub integration. Greenkeeper can be configured to automatically update your dependencies to their latest versions without any manual intervention. Greenkeeper will also ensure that it doesn’t introduce breaking changes by running npm test before merging in dependency version bumps, so you can rest assured that stability won’t be affected. Greenkeeper provides a great solution for automating the tedious task of dependency management with a minimal and straightforward setup.

Whilst Greenkeeper and similar tools are a great option for ensuring that you are using the latest versions of your dependencies, they don’t ensure that you are using vulnerability-free versions of your dependencies.

The main issue with these types of tools can be described by using the npm module Hawk as a use case.

- A developer installs hawk using npm install hawk --save and it adds the entry "hawk": "3.1.3" to the package.json of the application.

- The developer then decides down the line that they should introduce dependency management automation to their project.

- When Greenkeeper analyses the application and notices that there is an outdated version of hawk being used in one or more of the applications, Greenkeeper issues a fix and updates it to the latest available version, "4.0.0".

While this may seem to be a nice automation improvement, it can also backfire.

Hawk Version 3.1.3

Hawk Version 4.0.0

Spot the problem?

Remember, the latest version is not necessarily the safest version. Vulnerabilities can still be introduced in new versions. Is Greenkeeper or a similar solution the answer to keeping my dependencies vulnerability free? The short answer is, unfortunately, no.
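As a rough illustration of that point, the sketch below picks an upgrade target by skipping versions with known advisories instead of always taking the newest one. The function name and all version data are hypothetical, and the list of published versions is assumed to be sorted oldest first.

```javascript
// Sketch: choose the newest published version that has no known advisory,
// rather than blindly taking the latest. All data here is hypothetical.

// versions: published versions, oldest first (assumed to be sorted already).
// vulnerable: Set of versions with known advisories.
function pickUpgradeTarget(versions, vulnerable) {
  for (let i = versions.length - 1; i >= 0; i--) {
    if (!vulnerable.has(versions[i])) return versions[i];
  }
  return null; // every published version has a known advisory
}

const published = ["1.0.0", "1.1.0", "2.0.0"];
const flagged = new Set(["2.0.0"]);
console.log(pickUpgradeTarget(published, flagged)); // prints 1.1.0
```

An updater that only knows about version numbers would pick 2.0.0 here. One that also consults advisory data would stop at 1.1.0.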

The Better Solutions

NSP (nodesecurity.io)

The first security-focused dependency management tool I’m going to talk about is NSP, which stands for the Node Security Platform. It provides a command line tool and some very nice GitHub integration. There is a service called nsp Live that is free for open source and allows for real-time checks with CI support, along with GitHub PR integration.

NSP provides easy GitHub integration and prevents you from introducing vulnerable versions of modules in your applications.

After installing the command line tool, you can check for vulnerabilities in your project by running nsp check --output summary. You can also output to JSON format if you would like to use the data in other programs or tooling by using --output json. The command line tool is very simple, but it does the job of highlighting vulnerabilities in your Node.js applications.
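If you do feed the JSON output into other tooling, a small script can group the findings by module. The sketch below assumes the report is an array of objects with module, version and title fields. Those field names are an assumption, so check them against the real output of your nsp version. The sample data is invented.

```javascript
// Sketch: group findings from a JSON vulnerability report by module.
// Assumes each finding has `module`, `version` and `title` fields (unverified
// assumption about the report format).
function summarize(reportJson) {
  const findings = JSON.parse(reportJson);
  const byModule = new Map();
  for (const finding of findings) {
    const key = `${finding.module}@${finding.version}`;
    if (!byModule.has(key)) byModule.set(key, []);
    byModule.get(key).push(finding.title);
  }
  return byModule;
}

// Hypothetical sample input, not real nsp output.
const sample = JSON.stringify([
  { module: "example-lib", version: "1.0.0", title: "Example advisory A" },
  { module: "example-lib", version: "1.0.0", title: "Example advisory B" },
  { module: "other-lib", version: "2.3.4", title: "Example advisory C" },
]);

for (const [name, titles] of summarize(sample)) {
  console.log(`${name}: ${titles.length} finding(s)`);
}
```

In practice the input string would come from the output of nsp check --output json, for example read from a file or from standard input, instead of the inline sample.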

Pros

- Free for Open Source.

- GitHub Integration.

- Useful Output Formats when Using the CLI tool.

- Add vulnerabilities to ignore.

Installation

npm install -g nsp

Usage

- nsp check: Test for any known vulnerabilities.

Snyk (snyk.io)

Snyk offers the best of both worlds. It has a feature-rich command line tool, along with an excellent web application that provides a great UI for finding and reviewing security vulnerabilities. Just like NSP, it also provides great GitHub integration and checks for new vulnerabilities introduced through pull requests. The command line tool provided by Snyk will not only report vulnerabilities in components, but it will also offer to fix them with its wizard tool. Moving from the command line to the Snyk website, it’s easy to test npm modules for vulnerabilities, view a dashboard listing the vulnerabilities in your current project and configure your GitHub settings.

Snyk provides an interactive command line tool to easily mitigate vulnerabilities in your applications.

Snyk does a good job of creating a great end-user experience, along with providing very feature-rich tools and integrations to help keep your dependencies vulnerability-free.

Pros

- Free for Open Source.

- Excellent GitHub Integration.

- Feature-rich Website.

- Provides an easy way to test a public npm module & version for vulnerabilities.

- Email alerts for new vulnerabilities.

- Automatically opens fix PRs if a fix is available.

- Sleek dashboard for viewing current vulnerabilities in your projects.

Installation

npm install -g snyk

Usage

- snyk test: Test for any known vulnerabilities.

- snyk wizard: Configure your policy file to update, auto patch and ignore vulnerabilities.

- snyk protect: Protect your code from vulnerabilities and optionally suppress specific vulnerabilities.

- snyk monitor: Record the state of dependencies and any vulnerabilities on snyk.io.

Retire.js (retirejs.github.io)

Retire.js is a very thorough vulnerability scanner for JavaScript libraries. Although Retire.js doesn’t provide the web application features or GitHub integration that Snyk and NSP offer, it makes up for it in other ways.

Along with finding vulnerabilities in Node.js modules, it also scans for vulnerabilities in JavaScript libraries. This is very useful for finding vulnerabilities that you didn’t realize existed if you previously only used NSP or Snyk to secure your dependencies.

Retire.js is run primarily using their command line tool, but it can also be used in a number of different ways:

- As a grunt plugin

- As a gulp task

- As a Chrome extension

- As a Firefox extension

- As a Burp Plugin

- As an OWASP Zap plugin

Pros

- Completely Free!

- Very Versatile and Thorough Scanner.

- Reports vulnerabilities in JavaScript libraries, not just Node Modules.

- Plugins are available for popular intercepting proxies and penetration testing tools.

- Their website captures vulnerable library versions concisely in a table.

- Extensive scanning options: paths, folders, proxies, node/JS only, URL etc.

- Add vulnerabilities to ignore.

- Passive scanning using the browser plugin.

Installation

npm install -g retire

Usage

- retire --package: Limit node scan to packages where parent is mentioned in package.json.

- retire --node: Run node dependency scan only.

- retire --js: Run scan of JavaScript files only.

- --jspath <path>: Folder to scan for JavaScript files.

- --nodepath <path>: Folder to scan for node files.

- --path <path>: Folder to scan for both.

- --jsrepo <path|url>: Local or internal version of repo.

- --noderepo <path|url>: Local or internal version of repo.

- --proxy <url>: Proxy url (http://some.sever:8080).

- --outputformat <format>: Valid formats: text, json.

- --outputpath <path>: File to which output should be written.

- --ignore <paths>: Comma delimited list of paths to ignore.

- --ignorefile <path>: Custom ignore file, defaults to .retireignore / .retireignore.json.

- --exitwith <code>: Custom exit code (default: 13) when vulnerabilities are found.
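The exit code makes it straightforward to wire a scan into a build. The following is a minimal sketch, assuming that retire exits with 0 when nothing is found and that any other code besides the vulnerability code means the scan itself failed. The function names are hypothetical; the --path option and the default code of 13 are taken from the list above.

```javascript
const { spawnSync } = require("child_process");

// Map a process exit status to a CI outcome. 13 is the default listed above;
// pass the same value you give to --exitwith if you change it.
function classifyExit(status, vulnerableCode = 13) {
  if (status === 0) return "clean"; // assumption: 0 means no findings
  if (status === vulnerableCode) return "vulnerable";
  return "error"; // null (killed by a signal) or any other code
}

// Run retire against a project directory and classify the result.
// Not invoked here because it requires retire to be installed and on the PATH.
function runRetire(projectPath) {
  const result = spawnSync("retire", ["--path", projectPath], { stdio: "inherit" });
  if (result.error) return "error"; // for example, the retire binary was not found
  return classifyExit(result.status);
}

console.log(classifyExit(13)); // prints vulnerable
```

A build script could call runRetire(".") and fail the build on "vulnerable" while treating "error" as a tooling problem to investigate separately.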

Conclusion

Dependency vulnerability management can be a very tedious, repetitive and time-consuming task if done manually. The above-mentioned tools can greatly increase both vulnerability visibility and mitigation without too much manual intervention. Here are some quick tips to help stay on top of these vulnerabilities:

- Set up GitHub PR security checks to catch vulnerabilities being introduced with new code.

- Set up email alerts so you will be notified if a new vulnerability has been found in a module that you are using.

- Do regular vulnerability scans in your projects and modules.

- Test for vulnerabilities in JavaScript libraries, not just Node.js modules.

- Report new vulnerabilities in your issue tracker so they won’t be forgotten about!

Remember that vulnerabilities will creep in over time and will need to be managed. Security is an ongoing process, but active monitoring and alerts from automated tooling can go a long way to help you stay on top of these risks!

In upcoming posts, I’m going to look at attack and defense mechanisms for injection attacks in web & mobile applications and further automating security checks in the logic of the application, rather than in its dependencies.

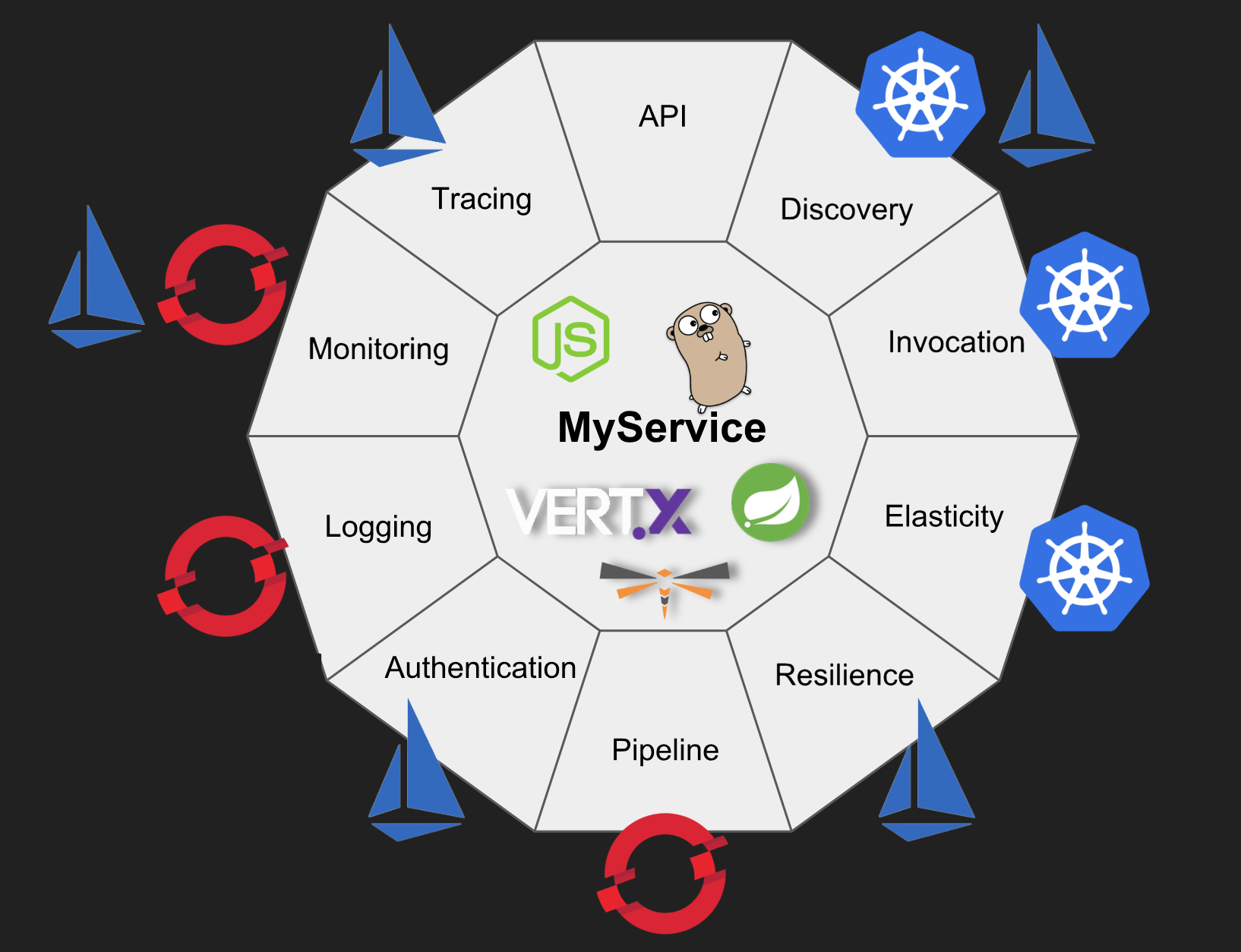

The Red Hat Mobile Application Platform is available for download.
